Navigating the Nuances: How Machine Learning Powers Artificial Intelligence

By: The LaunchPad Lab Team / May 14, 2024

Machine Learning (ML) and Artificial Intelligence (AI) are closely intertwined (and frequently confused with one another), but ML is actually a subset of AI. While AI is a high-level concept, Machine Learning is one practical way of building Artificial Intelligence with code: getting a system to learn from input data. We’re going to review the core concepts behind ML and how to use it.

To get started, we first need to define it. Machine Learning is the use of algorithms to train a program on the input data we provide, so that it can extract features and generate accurate predictions without being explicitly programmed to do so. This definition is a little basic and may fall short (especially nowadays, with all the hype around this topic), but it fits the needs of this article.

As a general rule, the more (good-quality) data we provide, the better the predictions we get. That’s a big part of why AI in general, and ML in particular, have grown so rapidly lately. Computing breakthroughs (especially in GPUs and in using them for fast parallel computing) have paved the way for ML models to be trained on more and more data, which is why generative models (probably the most data-hungry of all ML models) are doing so well. GPT-4 reportedly has around 1.76 trillion (with a T 🤯) parameters, although OpenAI has not officially disclosed its size.

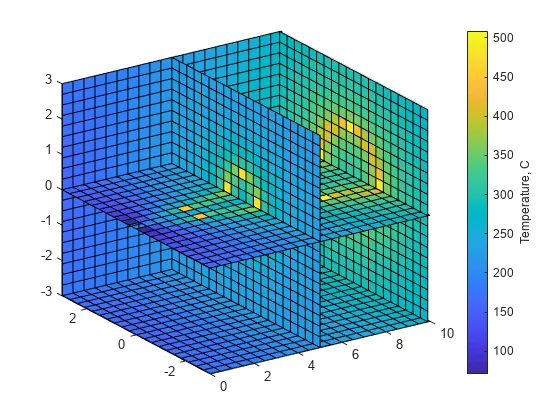

Under the hood, these models apply statistics, probability, and linear algebra to find patterns in data that often has many dimensions (features). A related family of techniques, dimensionality reduction, maps such high-dimensional datasets into 2 or 3 dimensions. This lets humans visualize the data and understand how different features relate to each other and influence the dataset as a whole.

We want to go from something like this:

Image extracted from MathWorks

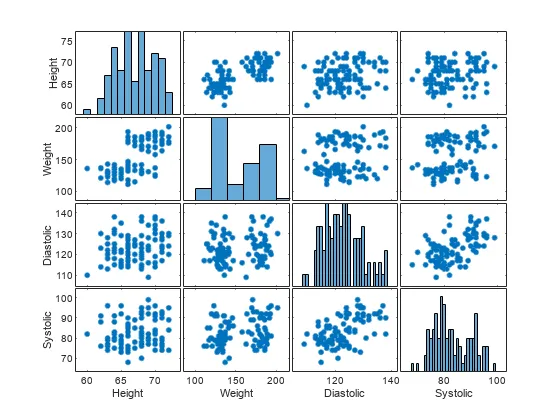

To something like this (just to illustrate the point; the two images are unrelated to each other):

Image extracted from MathWorks
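The dimensionality reduction idea above can be sketched with principal component analysis (PCA), one common technique for it. This pure-Python version is a minimal sketch, not a production implementation: it assumes a small made-up 3D dataset and approximates the top components with power iteration plus Gram-Schmidt orthogonalization. In practice you’d use a library routine such as scikit-learn’s `PCA` class.

```python
import math
import random


def mean_center(points):
    """Subtract each feature's mean so the data is centered at the origin."""
    n, d = len(points), len(points[0])
    means = [sum(p[j] for p in points) / n for j in range(d)]
    return [[p[j] - means[j] for j in range(d)] for p in points]


def covariance(centered):
    """Sample covariance matrix (d x d) of mean-centered data."""
    n, d = len(centered), len(centered[0])
    return [[sum(p[i] * p[j] for p in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def pca(points, k=2, iterations=500, seed=0):
    """Project points onto (approximately) their top-k principal components.

    Each component is found by power iteration on the covariance matrix,
    keeping it orthogonal to the components already found.
    """
    centered = mean_center(points)
    cov = covariance(centered)
    d = len(cov)
    rng = random.Random(seed)
    components = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(d)]
        for _ in range(iterations):
            # Multiply by the covariance matrix (pulls v toward high-variance directions).
            v = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            # Remove the parts along components we already have (Gram-Schmidt).
            for c in components:
                dot = sum(a * b for a, b in zip(v, c))
                v = [a - dot * b for a, b in zip(v, c)]
            # Normalize to unit length.
            norm = math.sqrt(sum(x * x for x in v))
            v = [x / norm for x in v]
        components.append(v)
    # Each point's new coordinates are its dot products with the components.
    return [[sum(a * b for a, b in zip(p, c)) for c in components] for p in centered]


# Hypothetical 3D points that all lie on the plane z = 2x - y + 1.
points = [[x, y, 2 * x - y + 1] for x, y in [(0, 0), (1, 2), (3, 1), (4, 5), (2, 3), (5, 0)]]
for original, flat in zip(points, pca(points, k=2)):
    print(original, "->", [round(c, 3) for c in flat])
```

Because these hypothetical points all lie on a flat plane, the 2D coordinates keep every pairwise distance intact; with real data, some information is lost in the projection.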

The end goal is a system that can make predictions about a specific situation given a specific state. The machine builds a mathematical model that produces an output based on the patterns it extracted from the data we provided.

Conceptually, there are three main types of Machine Learning: supervised learning, unsupervised learning, and reinforcement learning, each with many different algorithms:

- Supervised Learning: We act as a teacher to the model, showing it inputs paired with the outputs we expect, so that through its calculations it can uncover the underlying patterns in the data. We refer to this as labeled data: for example, we provide an image of a dog and tell the model “this is a dog”, and repeat this with a large number of examples. Once trained on enough data, the model can tell a dog apart from other animals; in the same way, a model trained on labeled car listings can predict the price of a used car given its characteristics. This type of model usually achieves higher accuracy and is versatile (we can use it for classification, regression, or object detection, among other tasks). On the downside, it can only learn from the labeled data we provide, which makes it less flexible (each model tends to be designed for a specific task), and it can generalize poorly: when the training data is too small or unrepresentative, or the model overfits it, it performs poorly on new, unseen data.

Some common algorithms used in this approach are regression analysis, decision trees, k-nearest neighbors, neural networks, and support vector machines (SVMs).
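The core supervised idea (learn from labeled input-output pairs, then predict for an unseen input) can be shown with the simplest regression model: a straight line fitted by ordinary least squares. This pure-Python sketch assumes a single hypothetical feature (mileage) and made-up prices; a real used-car model would use many features and typically a library class such as scikit-learn’s `LinearRegression`.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical labeled data: (mileage in thousands of miles, price in USD).
mileage = [10, 30, 50, 70, 90]
price = [24000, 21000, 18000, 15000, 12000]

slope, intercept = fit_line(mileage, price)
predicted = slope * 60 + intercept  # estimate for an unseen car with 60k miles
print(f"price = {slope:.1f} * mileage + {intercept:.1f}; 60k miles -> ${predicted:,.0f}")
```

The labels (prices) are what make this supervised: the model’s parameters are chosen to match known answers, then reused on inputs it has never seen.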

- Unsupervised Learning: Here the data has no labels. The model groups similar data points into clusters and builds relationships and patterns between the individual entries of the dataset on its own. This approach is great for discovering hidden relationships (e.g., Facebook’s “People You May Know” algorithm), and it’s also good at working with high-dimensional data (think of high-resolution images, where every pixel in every color channel counts as a separate dimension). One caveat is that unsupervised learning results can be hard to predict and interpret, since it isn’t obvious at first sight how the output was built. Also, noise in the dataset (random or irrelevant variation in the data points) can lead to unreliable results. Some techniques used in this approach are k-means clustering, dimensionality (feature) reduction methods such as principal component analysis, and social network analysis.

- Reinforcement Learning: This important category of ML algorithms does well on tasks such as game playing, robotics, and self-driving. An agent starts from an initial state, takes actions that move it through a sequence of states, and receives a reward (or a penalty) along the way. The agent tries to maximize its total reward, or equivalently minimize a total cost, depending on how we frame the problem (we can also cap the number of steps it takes). One trade-off we’ll face with these algorithms is exploration vs exploitation: balancing trying new moves to discover states that might pay off better (exploration) against repeating the moves already known to yield good rewards (exploitation). Some useful algorithms in this approach are Q-learning and deep reinforcement learning methods.
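To make the unsupervised case concrete, here is a minimal pure-Python sketch of k-means clustering (Lloyd’s algorithm), one of the techniques listed above. The 2D points are made up and unlabeled: the algorithm groups them without being told which group each belongs to. Real projects would typically use a library implementation such as scikit-learn’s `KMeans`.

```python
import math
import random


def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's algorithm: returns (centroids, labels) for k clusters."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]  # start from k random points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of the points assigned to it.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append([sum(dim) / len(members) for dim in zip(*members)])
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return centroids, labels


# Hypothetical unlabeled 2D points: two loose groups, but the algorithm isn't told that.
points = [(1, 1), (1.5, 2), (2, 1.5), (8, 8), (8.5, 9), (9, 8.5)]
centroids, labels = kmeans(points, k=2)
print("centroids:", centroids)
print("labels:", labels)
```

The result depends on the starting centroids, which is one reason unsupervised output can feel unpredictable; libraries usually run several initializations and keep the best one.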
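For reinforcement learning, here is a minimal tabular Q-learning sketch in pure Python. The environment is a hypothetical six-cell corridor: the agent starts at the left end and earns a reward of 1 for reaching the right end, with a small cost per step. The learning rate `alpha`, discount `gamma`, and exploration rate `epsilon` are illustrative assumptions, not tuned recommendations. The epsilon-greedy choice is where the exploration vs exploitation trade-off shows up in code.

```python
import random

N_STATES = 6        # corridor cells 0..5; cell 5 is the goal (terminal)
MOVES = (-1, +1)    # action 0 = move left, action 1 = move right


def step(state, action):
    """Hypothetical environment: returns (next_state, reward, done)."""
    next_state = min(max(state + MOVES[action], 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True      # reached the goal
    return next_state, -0.01, False       # small cost per step favors short paths


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    """Tabular Q-learning with an epsilon-greedy policy; returns q[state][action]."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):  # cap the number of steps per episode
            if rng.random() < epsilon:
                action = rng.randrange(2)  # explore: try a random move
            else:
                action = max(range(2), key=lambda a: q[state][a])  # exploit: best known move
            next_state, reward, done = step(state, action)
            # Move the estimate toward reward + discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
print(policy)
```

The agent is never told “go right”; it only sees rewards, and the move-right policy emerges from the repeated updates.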

Now that we’ve established, in a broad sense, what Machine Learning is and how it differs from Artificial Intelligence at a conceptual level, we can move on to what it’s like to work with it.

Working With Machine Learning

First of all, we need to pick some tools to work with:

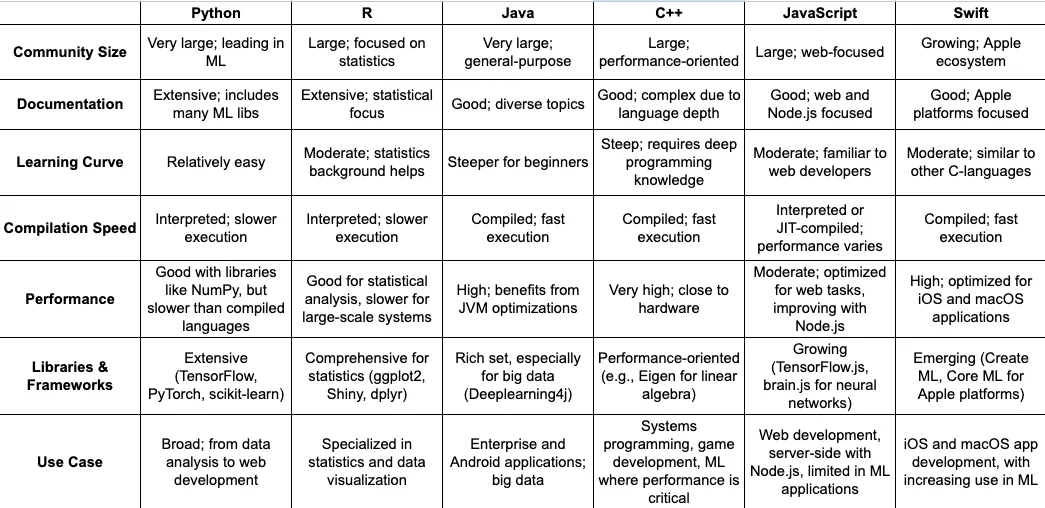

- Programming language: There are many alternatives here (practically every programming language out there), but the go-to recommendation is Python, thanks to its large community, reasonable learning curve, and library support. Some other alternatives are Java, C/C++/C#, R, Swift, JavaScript, and Ruby. Below is a comparison between them on some relevant topics.

- Data source: You need to define where the data is going to come from. Maybe you already have a database that you want to use here, but if that’s not your case, many websites offer free datasets. One option is https://www.kaggle.com/ where you can get datasets to work with and start learning how to program ML models.

- Algorithm: The algorithm we choose depends closely on the problem we face and on the structure and amount of data we have. For example, we could have a dataset of houses for sale in a given city, with their characteristics, and need to predict how much the next house will sell for. For that problem and dataset, one of the supervised learning (regression) algorithms mentioned above is the natural choice. Another example: we have a large dataset of emails and need a tool that detects the next spam email based on the ones we provide. If those emails are labeled as spam or not spam, this is also a supervised learning problem (classification); if they aren’t labeled, an unsupervised learning algorithm such as clustering could help uncover underlying patterns that group similar spam emails together.

- Infrastructure: To begin working in the field, a personal computer is usually more than enough (we can bear waiting a couple of minutes for a program to run). Once we start scaling, we can either build our own GPU cluster or rely on distributed computing from a cloud provider like Amazon, Google, or Microsoft.

- Code Libraries:

  - TensorFlow: Built and maintained by Google, it supports several programming languages, such as Python and JavaScript (its experimental Swift version has since been archived). It provides building blocks for many of the algorithms mentioned above, especially neural networks, plus many of the tools needed to build ML models, like activation functions and data-splitting utilities, among others.

  - Scikit-Learn: Developed largely at INRIA and made for Python. It provides a broad catalog of classic ML algorithms (regression, classification, clustering, dimensionality reduction) and the tools to build models with them, though unlike TensorFlow it isn’t focused on deep learning.

  - NumPy: This library is a must if you want to build any math-related model with Python. It provides a wide range of mathematical functions, especially for working with arrays and matrices. Made for Python, it’s a NumFOCUS-sponsored project.

  - Pandas: This library provides data structures to better handle and transform the datasets we work with. It’s mostly used to prepare data before we feed it into the model. Also made for Python and sponsored by NumFOCUS.

  - PyTorch: Originally developed at Facebook (now Meta), it’s a popular alternative to TensorFlow. It’s intuitive, easy to use, and provides all the tools needed to work with ML models.

  - Keras: A library that’s integrated into TensorFlow but was originally built independently. It focuses on neural networks, which power deep learning (a subset of machine learning that can be applied to supervised, unsupervised, and reinforcement learning problems).
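To tie the data source, Pandas, and data-splitting pieces together, here is a minimal stdlib-only sketch of the usual first step: load a CSV and hold out part of it for testing. The inline CSV stands in for a downloaded dataset (e.g. from Kaggle), and its columns and values are made up; `load_rows` and `split_rows` are hypothetical helpers. With the libraries above, you’d typically use `pandas.read_csv` and scikit-learn’s `train_test_split` instead.

```python
import csv
import io
import random

# Stand-in for a downloaded CSV file; the columns and values are hypothetical.
RAW_CSV = """sqft,bedrooms,price
1400,3,245000
1600,3,312000
1700,4,279000
1875,4,308000
1100,2,199000
1550,3,219000
2350,4,405000
2450,5,324000
"""


def load_rows(text):
    """Parse CSV text into a list of dicts with numeric values."""
    reader = csv.DictReader(io.StringIO(text))
    return [{key: float(value) for key, value in row.items()} for row in reader]


def split_rows(rows, test_fraction=0.25, seed=42):
    """Shuffle a copy of the rows and hold out a fraction for testing."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


rows = load_rows(RAW_CSV)
train, test = split_rows(rows)
print(f"{len(train)} training rows, {len(test)} test rows")
```

Keeping the test rows out of training is what lets us later check how the model does on data it hasn’t seen; the fixed seed just makes the split reproducible.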

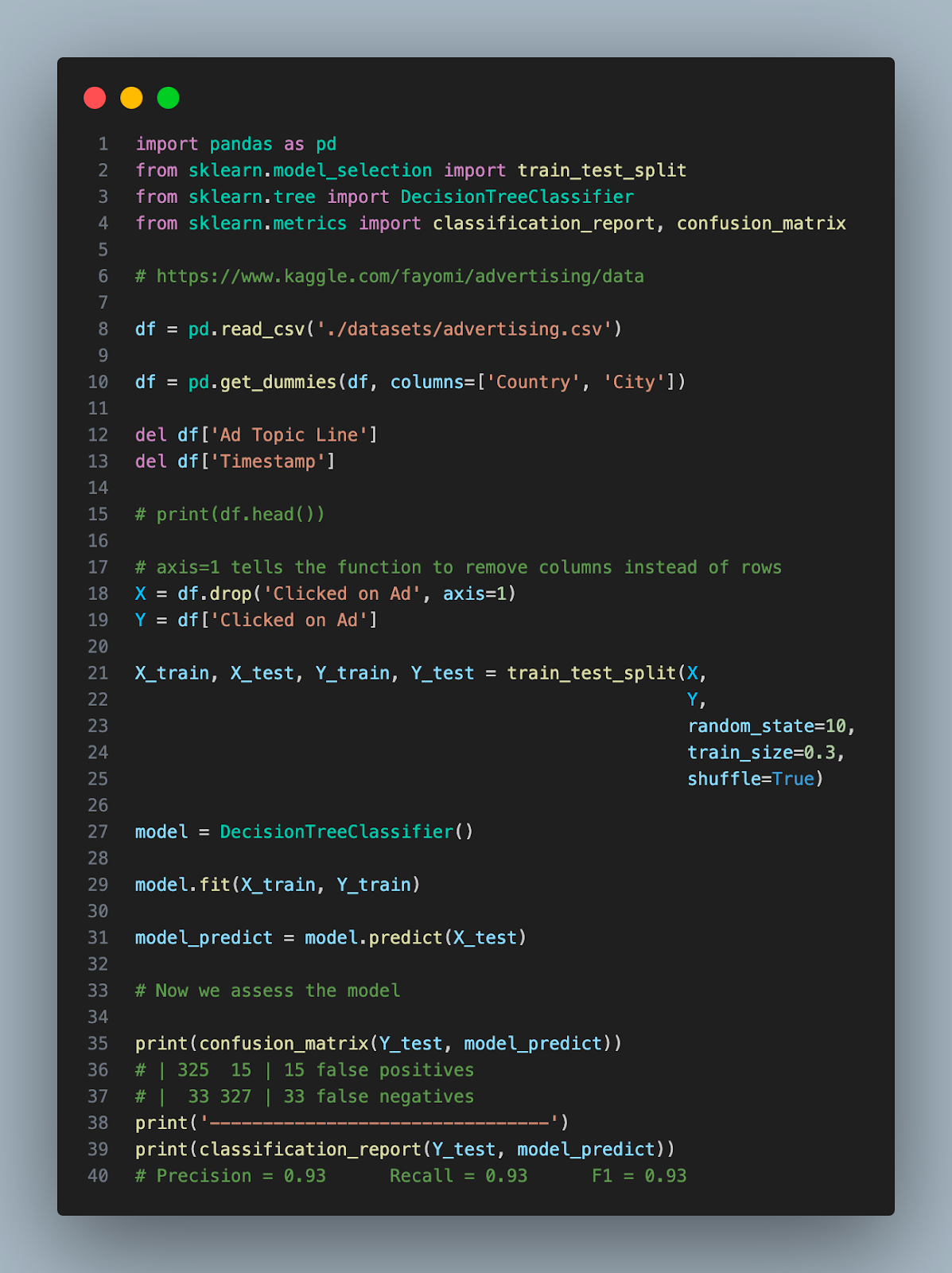

Lastly, here are some code examples for a few of the algorithms we mentioned earlier. I won’t be showing any results; this is just to paint a picture of what the code to build a simple model looks like:

Decision Trees Algorithm:
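A minimal pure-Python sketch of a decision tree classifier, using CART-style splits on Gini impurity. The features (weight and height) and animal labels are made up to echo the dog example earlier; with scikit-learn, the equivalent is roughly `DecisionTreeClassifier(max_depth=3)` followed by `.fit(X, y)` and `.predict(...)`.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 when every label in the group is the same."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(X, y):
    """Return the (feature, threshold) that most reduces weighted Gini impurity, or None."""
    best, best_score = None, gini(y)
    for feature in range(len(X[0])):
        for threshold in sorted({row[feature] for row in X}):
            left = [label for row, label in zip(X, y) if row[feature] <= threshold]
            right = [label for row, label in zip(X, y) if row[feature] > threshold]
            if not left or not right:
                continue  # a split must send rows both ways
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (feature, threshold), score
    return best


def build_tree(X, y, depth=0, max_depth=3):
    """Grow the tree recursively; a leaf is simply the majority label."""
    split = best_split(X, y) if depth < max_depth else None
    if split is None:
        return Counter(y).most_common(1)[0][0]
    feature, threshold = split
    left = [i for i, row in enumerate(X) if row[feature] <= threshold]
    right = [i for i, row in enumerate(X) if row[feature] > threshold]
    return {
        "feature": feature,
        "threshold": threshold,
        "left": build_tree([X[i] for i in left], [y[i] for i in left], depth + 1, max_depth),
        "right": build_tree([X[i] for i in right], [y[i] for i in right], depth + 1, max_depth),
    }


def predict(tree, row):
    """Follow the splits from the root down to a leaf label."""
    while isinstance(tree, dict):
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree


# Hypothetical labeled data: [weight in kg, height in cm] -> animal.
X = [[4, 25], [5, 23], [3, 20], [30, 60], [25, 55], [35, 65], [6, 30], [20, 50]]
y = ["cat", "cat", "cat", "dog", "dog", "dog", "cat", "dog"]

tree = build_tree(X, y)
print(tree)
print(predict(tree, [28, 58]))
```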

K-Nearest Neighbors Algorithm:
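A minimal pure-Python sketch of k-nearest neighbors classification: find the `k` training points closest to the query and take a majority vote. The data is made up; scikit-learn’s `KNeighborsClassifier(n_neighbors=3)` is the usual library equivalent.

```python
import math
from collections import Counter


def knn_predict(X_train, y_train, query, k=3):
    """Classify query by majority vote among its k nearest training points."""
    distances = sorted(
        (math.dist(row, query), label) for row, label in zip(X_train, y_train)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical labeled data: [weight in kg, height in cm] -> animal.
X_train = [[4, 25], [5, 23], [3, 20], [30, 60], [25, 55], [35, 65]]
y_train = ["cat", "cat", "cat", "dog", "dog", "dog"]

print(knn_predict(X_train, y_train, [28, 58]))
```

There is no real training step: the model is the stored data. Because predictions depend on distances, features on very different scales usually need to be standardized first; this sketch skips that.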

Support Vector Machine Algorithm:
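A minimal pure-Python sketch of a linear support vector machine, trained by stochastic subgradient descent on the regularized hinge loss. This is one of several ways to train an SVM; scikit-learn’s `SVC`, for example, is built on LIBSVM, uses a different solver, and also supports non-linear kernels. The learning rate, regularization strength, and 2D points are illustrative assumptions.

```python
import random


def train_linear_svm(X, y, lr=0.01, lam=0.01, epochs=500, seed=0):
    """Train a linear soft-margin SVM with stochastic subgradient descent.

    Minimizes lam/2 * ||w||^2 + average hinge loss max(0, 1 - y * (w.x + b)).
    Labels must be +1 or -1. Returns (weights, bias).
    """
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    b = 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            score = sum(wj * xj for wj, xj in zip(w, X[i])) + b
            if y[i] * score < 1:
                # Inside the margin or misclassified: the hinge-loss term is active.
                w = [wj - lr * (lam * wj - y[i] * xj) for wj, xj in zip(w, X[i])]
                b += lr * y[i]
            else:
                # Correct with enough margin: only the regularization term applies.
                w = [wj - lr * lam * wj for wj in w]
    return w, b


def svm_predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x falls."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


# Hypothetical 2D points with labels +1 / -1.
X = [[1, 2], [2, 1], [2, 2], [-1, -2], [-2, -1], [-2, -2]]
y = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(X, y)
print("weights:", w, "bias:", b)
print(svm_predict(w, b, [3, 3]))
```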

Logistic Regression Algorithm:
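A minimal pure-Python sketch of logistic regression trained with batch gradient descent on the average log loss. The single feature (hypothetical hours of practice) and pass/fail labels are made up, and the learning rate and epoch count are illustrative; scikit-learn’s `LogisticRegression` fits the same kind of model with more robust solvers and L2 regularization by default.

```python
import math


def sigmoid(z):
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(X, y, lr=0.5, epochs=5000):
    """Batch gradient descent on the average log loss. Labels are 0 or 1."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * d
        grad_b = 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log loss for one example is (prediction - label) * input.
            error = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            grad_w = [g + error * xj for g, xj in zip(grad_w, xi)]
            grad_b += error
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w, b, x):
    """Probability that x belongs to class 1."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical data: hours of practice -> passed (1) or failed (0).
X = [[0.5], [1.0], [1.5], [2.0], [2.5], [3.0], [3.5], [4.0]]
y = [0, 0, 0, 1, 0, 1, 1, 1]

w, b = train_logistic_regression(X, y)
print(f"P(pass | 1 hour) = {predict_proba(w, b, [1.0]):.2f}")
print(f"P(pass | 3.5 hours) = {predict_proba(w, b, [3.5]):.2f}")
```

Despite its name, logistic regression is a classification model: it outputs a probability, and we typically predict class 1 when that probability is above 0.5.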

Machine Learning Resources

If you want to dig deeper into this topic and start building your own Machine Learning models, here are a few resources I recommend:

- Andrew Ng’s Machine Learning Course

- Scatterplot Press: Building a House Price Prediction Model
  - A free course that teaches you how to build a prediction model.

- Machine Learning for Beginners, Instagram
  - An Instagram channel with machine learning-related posts.

- Mathematics for Machine Learning
  - A book to better understand what goes on behind the scenes.

- “Machine Learning with Random Forests and Decision Trees: A Visual Guide for Beginners”
  - A book that walks through these algorithms and helps visualize how they work.

We can draw a parallel between Machine Learning as a field and other fields like medicine or law. Referring to ML is much like referring to law as a whole: there are many branches and specializations within it, and the further we branch out in the ML field, the more topics we find to research and work with. On top of that, since it’s software, it evolves and grows rapidly. Given the topic, I couldn’t wrap up this article without relying on ChatGPT for a punchline, so here it goes:

“As we navigate the expanding landscape of machine learning, it’s clear that our journey is far from over. With each new development, we’re not just coding; we’re crafting the future, piece by piece. So, stay tuned as we continue to explore this ever-changing terrain together, uncovering practical insights and forging new tools in the vast world of ML. There’s so much more to learn, and I can’t wait to see where this path takes us next.”
